Agile Data Warehouse Development and Testing

Photo by Joshua J. Cotten on Unsplash

One of the greatest data management and data warehouse challenges I faced, was while working as a designer and DBA of a multi-terabyte Oracle project for a Tier 1 Investment bank. The project encompassed over a hundred designers, developers, and testers, all running in three parallel development streams, capped off with several System, and User Acceptance Test (UAT) projects in parallel. It was a nightmare to manage.

One of my responsibilities was to help design the procedures to manage fifteen multi-terabyte warehouse environments, ensuring everyone was running with the correct code version, database changes were correctly applied, and every platform loaded with the correct data.

As you can imagine, it was a titan configuration management challenge, made especially difficult because it took over two days to backup and deliver a copy of the PROD database for testing. I accepted that database version management was difficult, but it was made exponentially more challenging because of the huge data volumes involved.

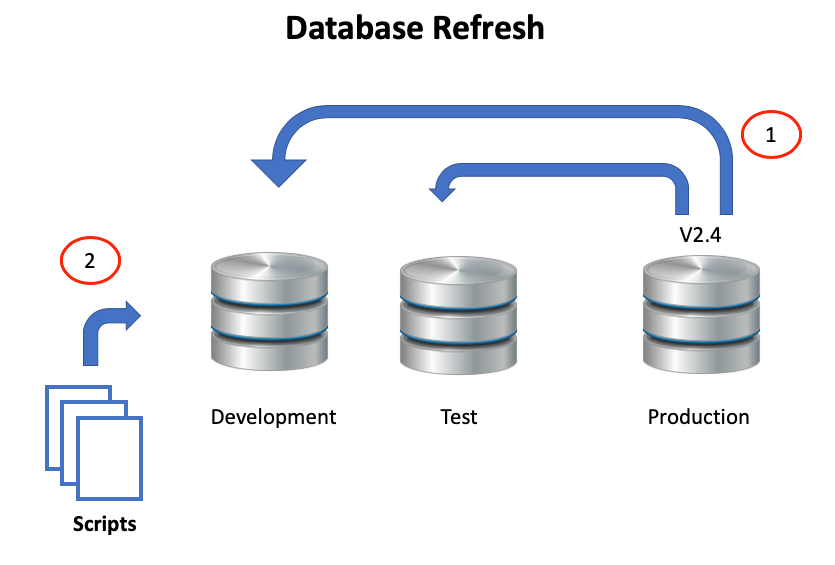

Figure 1: Refreshing the Database from Production

Figure 1 above illustrates the starting point, which involves copying the Production database back to the Development and Test platforms to give a consistent starting point for the next cycle. On a large volume data warehouse system, this can be an enormous challenge.

Once every database is at a consistent point, the next phase of development database changes are applied, and the test cycle can begin.

Figure 2: Database Change Management Process

Figure 2 above illustrates the standard development process, whereby changes on development are promoted first to a test database, then finally to the production system.

While the above process is logical and normally works well, it has several drawbacks – especially when deployed on a traditional on-premises system: -

It is very slow: Because of the time taken to copy data from Production to Test. This means, it's impossible to quickly restore the database after a test cycle, which in turn, often limits the validity of testing.

Multiple Dedicated Servers: Even to support a simple process, it potentially needs several database platforms to support Production, UAT and Integration Testing in addition to Development.

Duplicate Data Copies: Each database server needs a replica copy of the production data which can run to hundreds of terabytes, and significant cost. The alternative method of reducing data volumes involves delivering cut-down copies of the data, but this process can be equally difficult on large data volumes, and further extends the refresh time.

Data Replication Effort: Each database needs to be periodically refreshed from the production copy, and on a large system this can be a huge challenge in terms of both time and effort. Ideally, test databases would be refreshed even more frequently, perhaps at the end of every test cycle, but that’s simply not feasible.

Performance Testing: Given the potentially huge capital cost of a full-size production database, it’s normal for the Development and Test databases to be significantly smaller than production to save money. This means it’s almost impossible to perform any meaningful performance testing. A significant risk when working with large data volumes.

Snowflake Zero Copy Clones and Time Travel

The Snowflake Data Warehouse addresses the above challenges using Zero Copy Clones and Time Travel which provide the ability to clone a table, a full schema or even an entire multi-terabyte database within minutes. Even better, using the Enterprise edition, you can create a clone from any point in time up to 90 days in the past.

create database prod_copy

clone prod_db

at (offset => 3600);To demonstrate the speed of taking a clone copy, the above SQL statement was executed on a SMALL virtual warehouse to clone a multi-terabyte warehouse as it was exactly an hour ago. The entire process completed in under ten seconds.

Time travel includes the ability to query the database exactly as it was, at any point up to 90 days ago. This includes the ability to: -

Query by timestamp: Using SQL to return transactionally consistent results at a given point in time, down to within a millisecond.

Query by Statement ID: To clone a table just before a given SQL statement was executed. This may be useful, for example, to restore the database at a point just before a batch update operation which corrupted the entire database.

Restore a Database: Using a clone operation, to take an instant database snapshot from any point in the last 90 days, and restore the database within seconds.

Recover a Database: Using the UNDROP command to instantly restore an accidentally dropped database, schema or table.

Create an updatable clone copy: For example, to support development or testing operations, by creating an instantly available, full database clone which is available for insert, update or delete operations, completely independent of the source. You can even add additional columns to a cloned table.

Maintain multiple independent clones: To support multiple parallel database environments at potentially zero additional storage cost. This can be used to create an unlimited number of clones, and run parallel testing while paying only for the subsequent data changes.

Database Backup and Restore

“Nobody needs to perform a backup! You need to be able to recover from data corruption.”

DBAs frequently point out the importance of performing database backups, but in reality, nobody needs to perform a backup. In reality you need to be able to recover from data corruption, and yet in traditional on-premise databases, a backup can take hours to complete, potentially consume compute resources, and a restore operation can be even longer.

Using a combination of Snowflake Zero Copy Clones and Time Travel, it’s possible to freeze a clone of the production database as it was up to 90 days ago, then recover from data corruption with a simple swap operation.

alter database prod_backup

swap with prod_db;The above SQL statement performs a database level swap which effectively restores the existing UAT database back to the point it was when the production clone was taken. On a multi-terabyte database, this was almost instant.

Agile Data Management with Snowflake

Using a zero-copy clone, we can reduce the time to deliver a fully working test database from days to minutes or even seconds. Whereas on traditional systems, it is simply not feasible to refresh data after each cycle, on Snowflake it is possible to refresh an entire database within seconds. The exact same process can then be repeated for development.

Figure 3: Agile Database Development with Snowflake

The diagram above illustrates the sequence to prepare a database ready for testing.

Clone Production: To produce a UAT copy. This should take the version of the database as it was 7 days ago, to support replaying historical data loads.

Apply Database Upgrade Scripts: To create the new and modified tables ready for testing.

Replay ELT Loads: Which involves re-executing the ingestion process against a known starting point. This applies the data changes for the past 7 days, effectively replaying the past weeks data load against the new solution.

Compare Results: Which involves comparing the existing production database with the new version. The only differences should be as a result of the upgrades and changes.

The above process can be repeatedly executed at the end of each testing cycle, and can apply all database environments including UAT, System and Performance Testing.

Using this method, it’s possible to create, test and replace the development and test databases within minutes, to support a rapid and agile test cycle. Furthermore, because each clone adds no physical storage, every developer can have their own full-size development database, reset within minutes on demand.

Finally, as it’s possible to deploy a full-size virtual warehouse within seconds, it’s possible to execute full strength performance testing against a full-size database, then simply close it down when not needed. As compute resources are charged by the second, a full size performance test is now possible.

Data Anonymization

One of other challenges faced by data warehouse projects involves the need to anonymise production data for development purposes. This may involve either removing or masking sensitive data.

Figure 4: Handling Sensitive Data with Snowflake Cloning

The diagram above illustrates the best practice approach for management of anonymized data. This involves a two-stage process: -

Anonymise the data: Which involves duplicating the source tables to create an anonymized copy, removing or replacing sensitive data as appropriate.

Clone the copy: Which produces one or more zero copy clones of the anonymized data. As each clone adds zero additional data storage, this adds no additional storage cost.

Using this method, multiple development and test database clones can be taken at zero additional cost from the anonymized copy.

Conclusion

One of the biggest challenges when working in an agile manner on data warehouse projects is the time and effort involved in replicating and physically transporting data for development and test cycles. When combined with the cost of hardware, storage and maintenance, this can be a significant challenge for most projects.

Using the Snowflake Zero Copy Clone and Time Travel features simplifies the process, and makes it remarkably simple to instantly replicate data with zero processing or additional storage cost.

Notice Anything Missing?

No annoying pop-ups or adverts. No bull, just facts, insights and opinions. Sign up below, and I will mail you when new content is available. I will never spam you or abuse your trust. Alternatively, you can leave a comment below.

Disclaimer: The opinions expressed on this site are entirely my own, and will not necessarily reflect those of my employer.